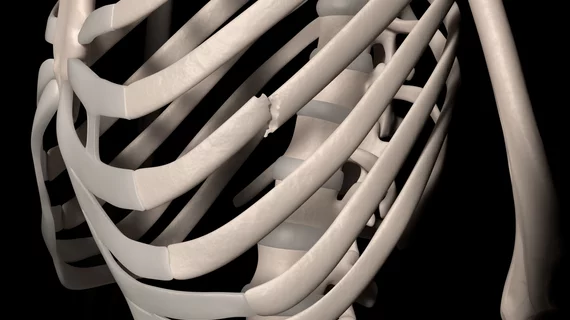

Research published Tuesday in Radiology found comparable performance between artificial intelligence and clinicians for fracture detection.

Experts examined 42 studies that compared the diagnostic performance of clinicians and AI in fracture detection and found no statistically significant difference in sensitivity between the two; the pooled estimates differed by only 1 percentage point.

“We found that AI performed with a high degree of accuracy comparable to clinician performance,” lead author Rachel Kuo, from the Botnar Research Center, Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences in Oxford, England, and co-authors wrote. “Importantly, we found this to be the case when AI was validated using independent external datasets, suggesting that the results may be generalizable to the wider population.”

The team’s meta-analysis of peer-reviewed publications included studies that developed and/or validated AI algorithms specifically for fracture detection. Together, these covered more than 55,000 images: 37 studies used radiographs and five used computed tomography (CT) scans to identify fractures.

In the internal validation sets of those studies, AI’s pooled sensitivity and specificity were 92% and 91%, respectively, compared with 91% and 92% for clinicians. Results for both AI and clinicians were similar in the external validation sets.

The experts noted that their findings point to a promising future for AI in both education and clinical practice. In addition to improving workflows, AI could help reduce early fracture misdiagnoses in emergency settings, especially when patients have sustained multiple fractures.

“The results from this meta-analysis cautiously suggest that AI is noninferior to clinicians in terms of diagnostic performance in fracture detection, showing promise as a useful diagnostic tool,” the authors wrote. “Current artificial intelligence is designed as a diagnostic adjunct and may improve workflow through screening or prioritizing images on work lists and highlighting regions of interest for a reporting radiologist. AI may also improve diagnostic certainty through acting as a second reader for clinicians or as an interim report prior to radiologist interpretation.”

The researchers noted, however, that AI is not a replacement for clinicians and that readers should still exercise caution when using algorithms as an adjunct.

“It remains important for clinicians to continue to exercise their own judgment,” Kuo said. “AI is not infallible and is subject to bias and error.”
