A deep learning framework trained to recognize abnormalities on brain MRI scans could shorten reporting times for abnormal examinations and help radiology departments manage their workloads, according to a new study.

A shortage of radiologists, combined with growing demand for medical imaging, has lengthened the time it takes to interpret images and complete reports. The delay is especially problematic when acute conditions require prompt attention.

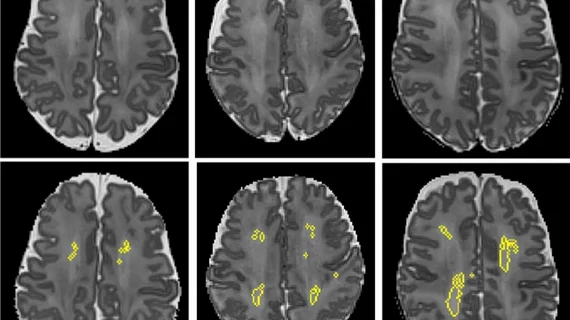

Researchers from King’s College London proposed a deep learning framework based on convolutional neural networks as a possible solution to these reporting delays. Their results were recently published in Medical Image Analysis.

“Potentially, computer vision models could help reduce reporting times for abnormal examinations by flagging abnormalities at the time of imaging, allowing radiology departments to prioritize limited resources into reporting these scans first,” corresponding author Thomas C. Booth, of the School of Biomedical Engineering and Imaging Sciences at King's College London, and co-authors suggested.

To test this idea, the researchers conducted a simulation study using retrospective data from routine hospital-grade axial T2-weighted and axial diffusion-weighted head MRI scans. Neuroradiology report classifiers were used to label a dataset of 70,206 examinations from two U.K. hospital networks, on which the models were then trained.

Using axial T2-weighted scans alone, the models classified examinations accurately as normal or abnormal and generalized well across all combinations of training and testing data. An ensemble model that averages the outputs from the T2-weighted and diffusion-weighted scans performed slightly better.
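To illustrate what averaging two models' results means, here is a minimal sketch. It is not the authors' code: it assumes each single-sequence model (one for T2-weighted, one for diffusion-weighted scans) outputs a probability that an examination is abnormal, and that the ensemble flags an exam when the averaged probability reaches a 0.5 threshold. The function names and the threshold are hypothetical.

```python
def ensemble_probability(p_t2: float, p_dwi: float) -> float:
    """Average the abnormality probabilities of two hypothetical single-sequence models."""
    return (p_t2 + p_dwi) / 2.0


def classify(p_abnormal: float, threshold: float = 0.5) -> str:
    """Label an exam; the 0.5 threshold is an illustrative assumption."""
    return "abnormal" if p_abnormal >= threshold else "normal"


# Hypothetical model outputs for one examination.
p = ensemble_probability(p_t2=0.7, p_dwi=0.4)
print(classify(p))
```

With these made-up outputs the averaged probability is 0.55, so the exam would be flagged as abnormal even though the diffusion-weighted model alone leaned toward normal. Averaging lets each sequence temper the other's errors, which is one plausible reason an ensemble can edge out a single model.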

Beyond classifying scans accurately, the model would, in simulation, reduce report wait times for patients with abnormal examinations from 28 days to 14 days and from 9 days to 5 days at the two hospital networks, respectively.
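Why flagging abnormal scans shortens their wait can be shown with a toy worklist model. This is a simplified sketch, not the authors' simulation: it assumes the whole backlog is present at the start, that radiologists report a fixed, hypothetical number of exams per day strictly in worklist order, and that the model's flags are given. It compares first-in, first-out reporting with a worklist that moves flagged exams to the front.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Exam:
    exam_id: str
    flagged_abnormal: bool  # hypothetical model output for this exam


def waits(worklist: list[Exam], reports_per_day: int) -> dict[str, int]:
    # The exam at position i is reported on day i // reports_per_day + 1.
    return {e.exam_id: i // reports_per_day + 1 for i, e in enumerate(worklist)}


def prioritise(worklist: list[Exam]) -> list[Exam]:
    # sorted() is stable: flagged exams move to the front while keeping
    # their original first-in, first-out order within each group.
    return sorted(worklist, key=lambda e: not e.flagged_abnormal)


def mean_abnormal_wait(worklist: list[Exam], reports_per_day: int) -> float:
    w = waits(worklist, reports_per_day)
    return mean(w[e.exam_id] for e in worklist if e.flagged_abnormal)


# Hypothetical backlog of 12 exams in which every fourth exam is flagged.
backlog = [Exam(f"E{i}", i % 4 == 3) for i in range(12)]
print(mean_abnormal_wait(backlog, reports_per_day=2))
print(mean_abnormal_wait(prioritise(backlog), reports_per_day=2))
```

In this made-up backlog, the flagged exams wait 4 days on average under first-in, first-out reporting but about 1.3 days once they are moved to the front, while total reporting capacity stays the same. The real study's simulation used actual hospital data and would account for factors this sketch ignores, such as exams arriving over time.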

“We demonstrated accurate, interpretable, robust and generalizable classification on a hold-out set of scans labelled by a team of neuroradiologists and have shown that the model would reduce the time to report abnormal examinations at both hospital networks,” the experts explained.

The results suggest the framework could be viable in a clinical setting and might help reduce costs and improve overall outcomes for patients with abnormal imaging, the authors said.

You can view the detailed research in Medical Image Analysis.
